Unit 1: AI Foundation

Comprehensive Overview of Key AI Foundational Concepts: History of AI, Machine Learning, & Neural Networks

What is Artificial Intelligence?

Artificial intelligence (AI) refers to computer systems that can perform tasks that typically require human intelligence, such as visual perception, speech recognition, and decision-making. AI is accomplished by using algorithms and machine learning techniques that allow software systems to improve through experience and new data. Key categories of AI include computer vision, natural language processing, robotics, and expert systems for complex problem-solving and planning.

How Does it Work?

Artificial intelligence systems use machine learning algorithms to analyze data, identify patterns and make predictions or decisions without being explicitly programmed to do so. The algorithms "learn" by being trained on large datasets, adapting their neural network models and parameters through experience to become better at the desired tasks. This ability to learn from data and improve through practice allows AI systems to exhibit intelligent behavior and perform human-like cognitive functions such as computer vision, speech recognition, and language translation.

You might be using Artificial Intelligence without even knowing it.

Siri, an Apple product found on most iPhones, iPads, and Mac computers, is an example of Artificial Intelligence.

ChatGPT is another example of Artificial Intelligence that most students have at least heard of.

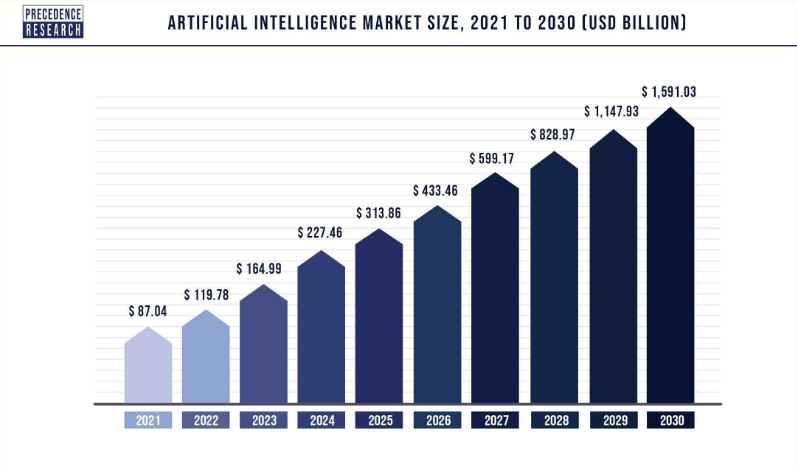

Artificial Intelligence is a growth industry.

Artificial intelligence is advancing quickly, often faster than people can keep track of. The speed and scale at which AI systems can ingest data and learn complex patterns through techniques like deep learning are unprecedented.

Within short periods, AI models can analyze millions of examples and teach themselves to make inferences and predictions that no human could match. The inner workings of these powerful systems are often black boxes, with logic hidden within multi-layer neural networks.

To understand the true power of AI, watch the video above.

The history of Artificial Intelligence.

Alan Turing first proposed the idea of the Turing Test in his 1950 paper to evaluate if machines can demonstrate human-like intelligence based solely on their ability to communicate. The test involves an evaluator having text conversations with a human and a machine while not knowing which is which and then judging if the machine’s responses seem human. The Turing Test established a milestone in artificial intelligence by defining a benchmark focused on computers exhibiting cognitive abilities on par with humans, like reasoning and natural language processing.

Google employs artificial neural networks to improve search results. These neural networks are inspired by the architecture of the human brain. By training large neural network models on massive datasets, Google can improve the relevance of search results as the systems learn to predict the user intent behind queries.

AI models like GPT-3 showcase remarkable advances in language processing capabilities. With 175 billion parameters, GPT-3 leverages vast datasets to model the nuances and complexity of textual communication. Optimizing such large neural networks achieves levels of language understanding, reasoning, and generation that earlier algorithms could not reach.

Artificial Intelligence advancement has been accelerating rapidly as of late. Major technology companies are heavily investing in AI research to drive innovation of transformational systems and capabilities. With the exponential pace of discoveries and applications unfolding across domains, the future trajectories of AI are proving ever-more challenging to anticipate.

How Do Machines Work?

Part One: Neural Networks

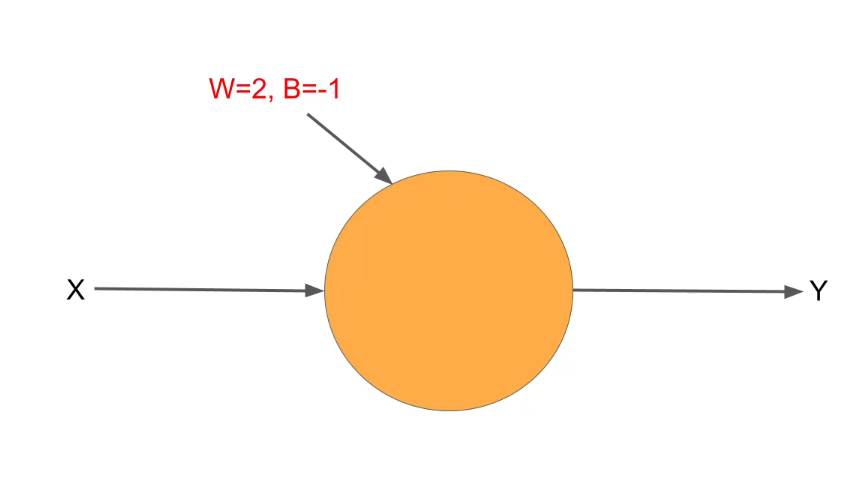

Neural networks are computing systems inspired by the biological neural networks that constitute animal and human brains. Based on layers of connected nodes resembling neurons, neural networks can learn and improve at tasks by processing and adapting to data inputs without task-specific programming.

A breakdown of how neural networks work.

Core to AI is machine learning, allowing computer systems to improve at tasks through data exposure rather than traditional coding. Specifically, neural networks offer a powerful machine learning approach by mimicking biological brain architecture. With substantial training data mapping inputs to target outputs, neural networks can discern complex relationships within multidimensional data.

Example

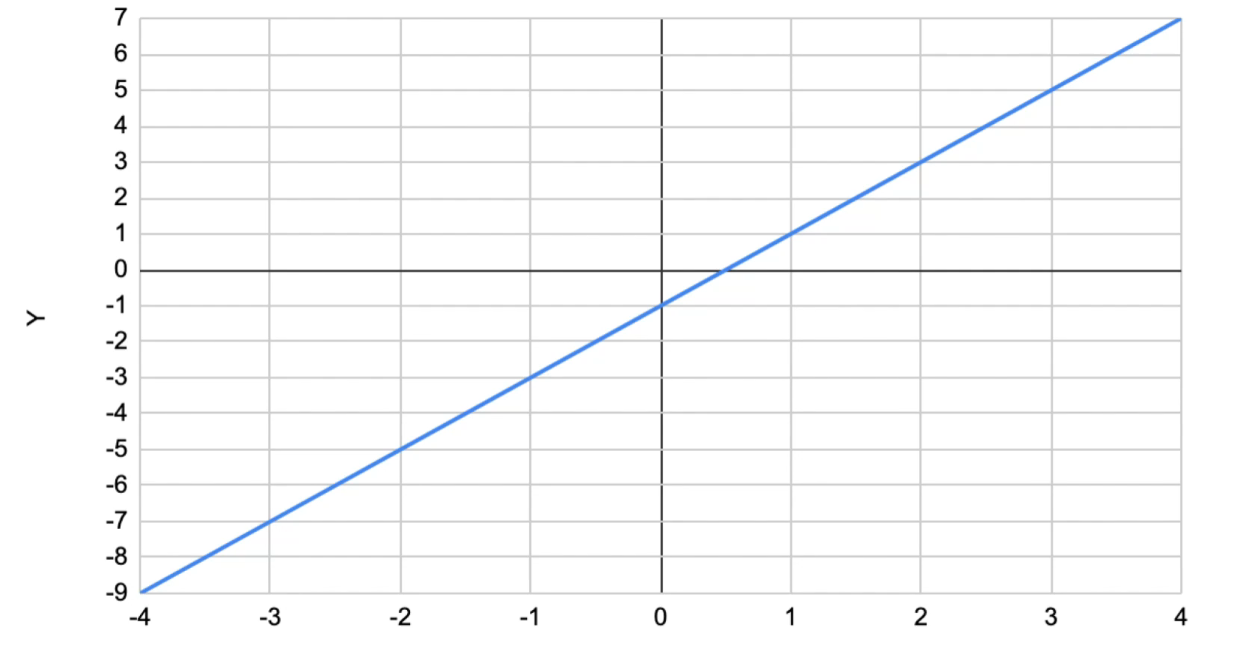

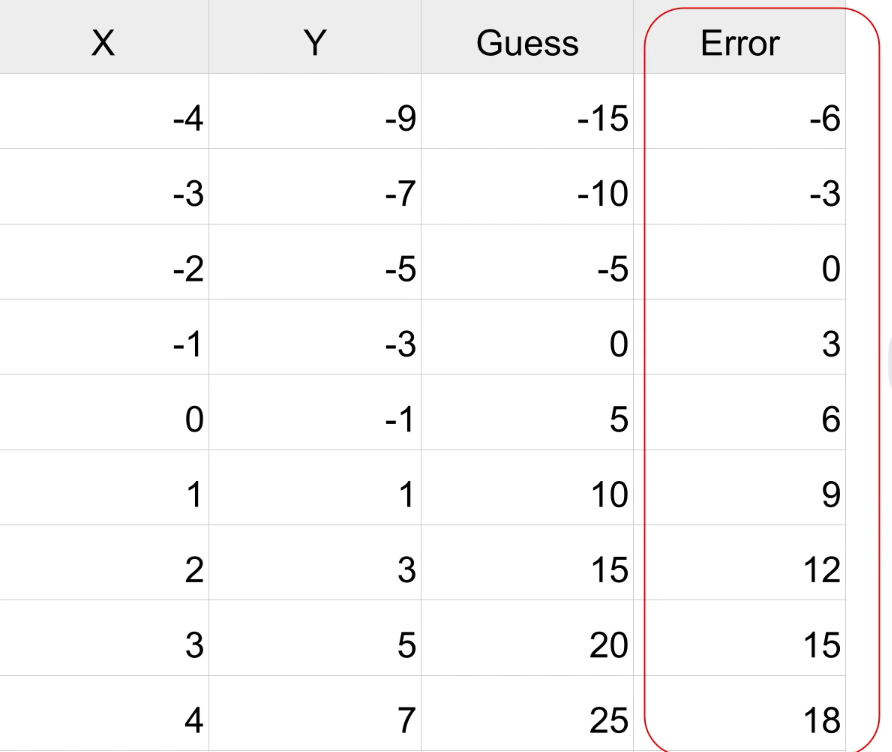

This is a graph of a line. The equation of a line is Y = WX + B, where W is the slope and B is the y-intercept. For this line, W = 2 and B = -1, so the equation is Y = 2X - 1.

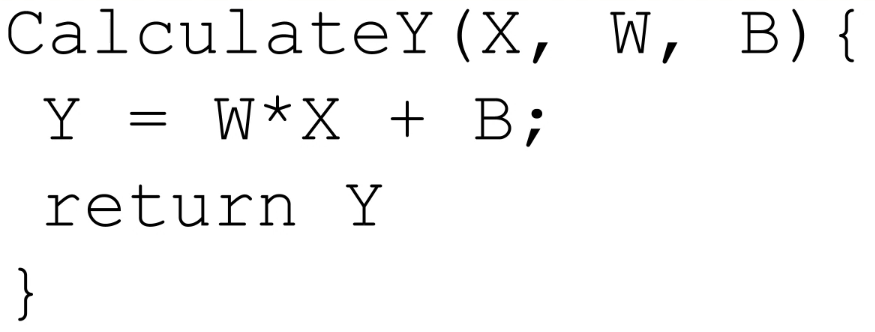

Using code, this can be translated into something called a method. It looks like this:

The computer guesses values for W and B, plugs in each X, and calculates a predicted Y. We already know the correct answer for this line: Y = 2X - 1.
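As a rough illustration of the idea, the line equation can be written as a small Python function. The name `predict` and the sample X values are assumptions chosen for this sketch; they do not come from the original figures.

```python
def predict(x, w, b):
    """Return Y for a straight line with slope w and y-intercept b."""
    return w * x + b

# Assumed sample inputs, used only for illustration.
xs = [-1, 0, 1, 2, 3, 4]

# The correct line from the example: slope 2, y-intercept -1.
true_ys = [predict(x, w=2, b=-1) for x in xs]
print(true_ys)  # [-3, -1, 1, 3, 5, 7]
```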

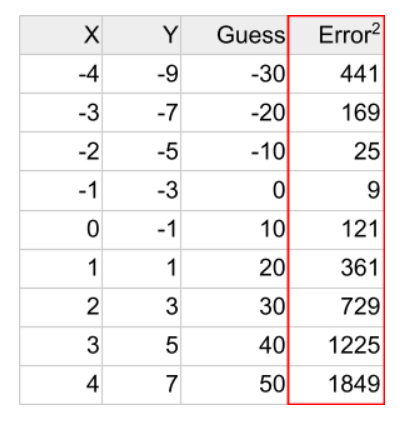

Let's say the computer guesses that W is 10 and B is 10 (Y = 10X + 10). It will check whether the guess was good or bad by comparing it with the correct answer.

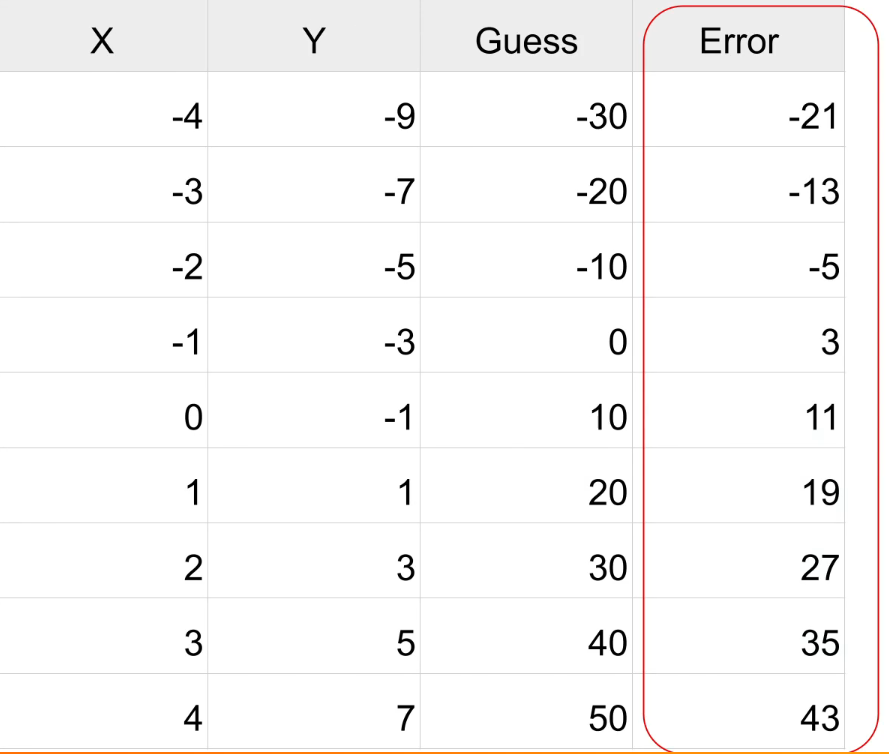

As you can see from the Error column, the guess was way off, so the computer will try again. This time it guesses that W is 5 and B is 5, which looks like this: Y = 5X + 5.

This guess-and-check process is, in simplified form, how an artificial neuron learns.
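The guess-and-check step can be sketched in Python as follows. The sample X values and the helper names `predict` and `errors` are assumptions made for this sketch, so the numbers may differ from those in the table above.

```python
def predict(x, w, b):
    """Return the guessed Y for slope w and y-intercept b."""
    return w * x + b

xs = [-1, 0, 1, 2, 3, 4]           # assumed sample inputs
targets = [2 * x - 1 for x in xs]  # correct answers from Y = 2X - 1

def errors(w, b):
    """Difference between each guessed Y and the correct Y."""
    return [predict(x, w, b) - t for x, t in zip(xs, targets)]

print(errors(w=10, b=10))  # [3, 11, 19, 27, 35, 43]
print(errors(w=5, b=5))    # [3, 6, 9, 12, 15, 18]
```

The errors for the second guess are smaller at almost every point, which suggests it is closer to the correct line.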

How Machine Learning Works

Comparing the first guess and the second guess, you can see that the second guess was better because its error is much lower. You can tell by adding up the squared value of every error. A total error closer to zero tells the computer that the second guess is closer to the correct answer. By repeating this process, the computer gets closer and closer to the right answer. The computer is "learning".
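A minimal sketch of this comparison, assuming the same illustrative X values as before (they are not taken from the original table), adds up the squared errors for each guess:

```python
def total_squared_error(w, b, xs, targets):
    """Sum of squared differences between guessed and correct Y values."""
    return sum((w * x + b - t) ** 2 for x, t in zip(xs, targets))

xs = [-1, 0, 1, 2, 3, 4]           # assumed sample inputs
targets = [2 * x - 1 for x in xs]  # correct answers from Y = 2X - 1

loss_first = total_squared_error(10, 10, xs, targets)   # guess Y = 10X + 10
loss_second = total_squared_error(5, 5, xs, targets)    # guess Y = 5X + 5
loss_exact = total_squared_error(2, -1, xs, targets)    # the correct line
print(loss_first, loss_second, loss_exact)  # 4294 819 0
```

Squaring keeps positive and negative errors from cancelling each other out, and the correct line gives a total of exactly zero.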

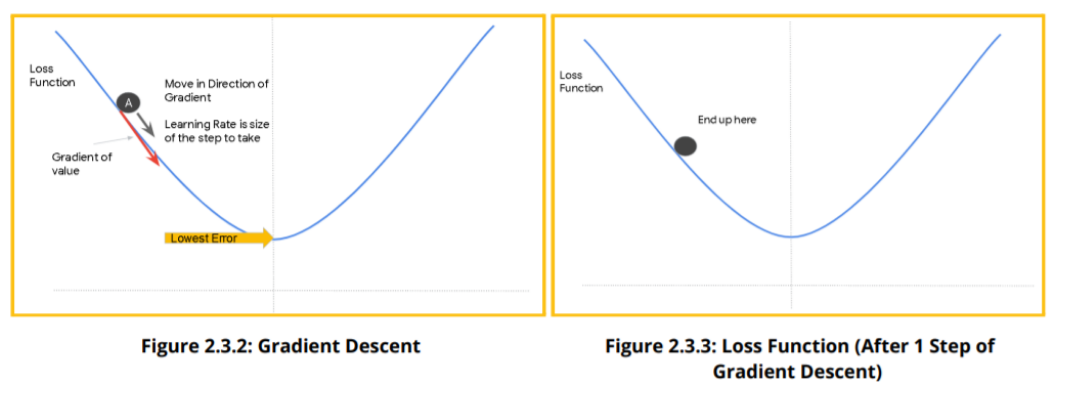

Observe point A in Figure 2.3.2. Consider that A is the value of the loss function with initial random values of W and B. Observe the slope or gradient of the curve at this point. This value tells us the direction to move to reach the point where the error is the lowest. The optimizer then generates another set of values for W and B, which move point A a little closer to the minimum error (Refer to Figure 2.3.3). The size of this step is determined by the learning rate, which the programmer usually sets. If the learning rate is too low, it will take too long to reach the minimum. On the other hand, if the size of the step is too large, the value might overshoot the minimum!
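To see the effect of the learning rate, here is a small sketch that uses a made-up one-parameter loss, (w - 2) squared, whose minimum sits at w = 2. The loss function, the starting point, and the three learning rates are all assumptions chosen for illustration.

```python
def gradient(w):
    """Slope of the toy loss (w - 2) ** 2 at the point w."""
    return 2 * (w - 2)

def descend(w, learning_rate, steps=20):
    """Take a fixed number of steps downhill from the starting value w."""
    for _ in range(steps):
        w = w - learning_rate * gradient(w)
    return w

start = 10.0  # assumed starting guess, 8 units away from the minimum
for lr in (0.01, 0.1, 1.1):
    final_w = descend(start, lr)
    print(f"learning rate {lr}: distance from minimum = {abs(final_w - 2):.3f}")
```

With these settings, the smallest rate is still far from the minimum after 20 steps, the middle rate gets close, and the largest rate overshoots on every step and ends up farther away than where it started.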

The process of descending along the loss curve to reach the point of minimum error is called Gradient Descent. Note that the figures shown here have only one parameter along the X-axis.
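With two parameters, W and B, the same idea applies to both at once. The sketch below is one possible way to write gradient descent for the line example. It uses the mean of the squared errors as the loss, and the sample X values, learning rate, and step count are assumptions rather than values from the original material.

```python
xs = [-1, 0, 1, 2, 3, 4]      # assumed sample inputs
ys = [2 * x - 1 for x in xs]  # correct answers from Y = 2X - 1

def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit Y = WX + B by gradient descent on the mean squared error."""
    w, b = 10.0, 10.0  # start from the first guess in the example
    n = len(xs)
    for _ in range(steps):
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to W and B.
        grad_w = 2 * sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = 2 * sum(errs) / n
        # Step a little way downhill, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 -1.0
```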

What is Deep Learning?

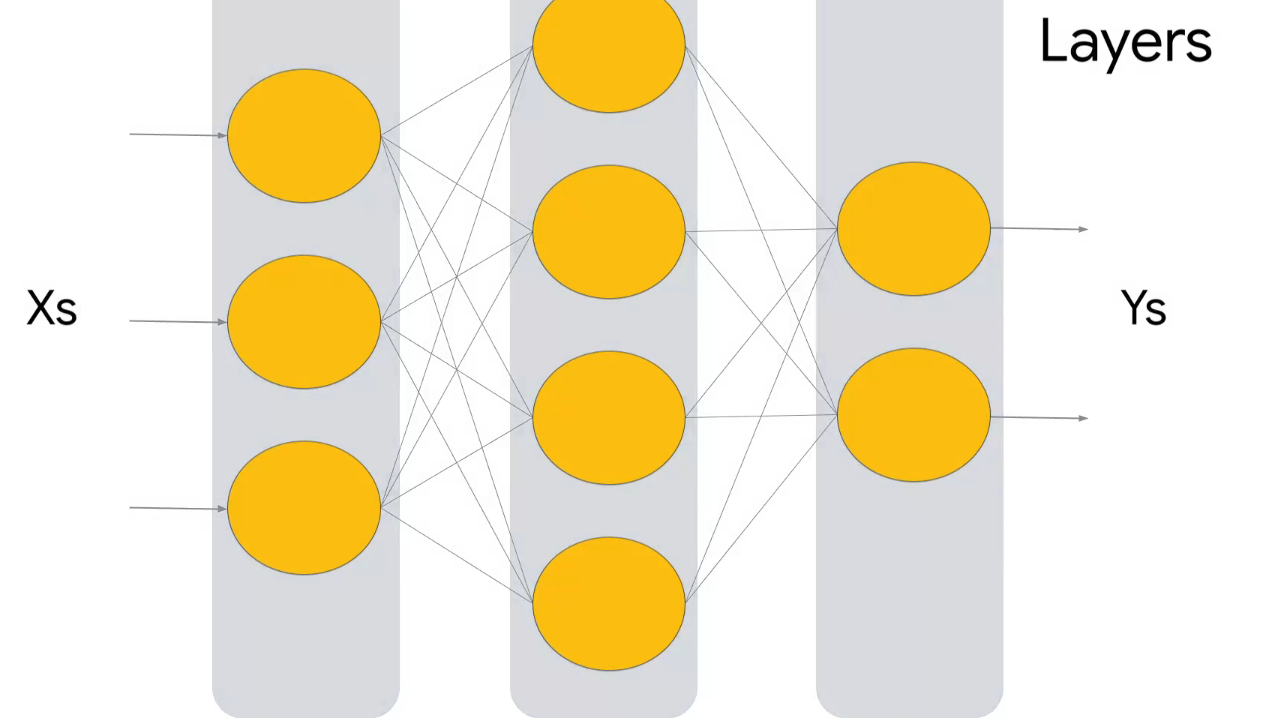

Deep learning is a specialized subset of machine learning based on artificial neural networks composed of multiple layers. With enough layers, a network can model high-level abstractions in data, learning complex concepts by building them out of simpler ones. Deep learning has enabled major improvements in accuracy across computer vision, speech recognition, natural language processing, gaming, and many other fields by discovering intricate structures within large datasets.

This is an example of deep learning. It has multiple layers (3 in the picture), and every neuron in one layer is connected to every neuron in the next layer.
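As a rough sketch of what "connected layers" means in code, the example below passes two input values through a small network with one hidden layer. The layer sizes, the weights, the biases, and the choice of the sigmoid activation are all hypothetical and are not taken from the picture.

```python
import math

def sigmoid(z):
    """Squash any number into the range between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """Each neuron multiplies every input by its own weight, adds a bias,
    and applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hypothetical values: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[0.7, -0.5, 0.2]]
output_biases = [0.05]

inputs = [1.0, 2.0]
hidden = dense_layer(inputs, hidden_weights, hidden_biases)
output = dense_layer(hidden, output_weights, output_biases)
print(hidden, output)
```

Every hidden neuron receives both inputs, and the output neuron receives all three hidden activations, which is what it means for each layer to be fully connected to the next.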

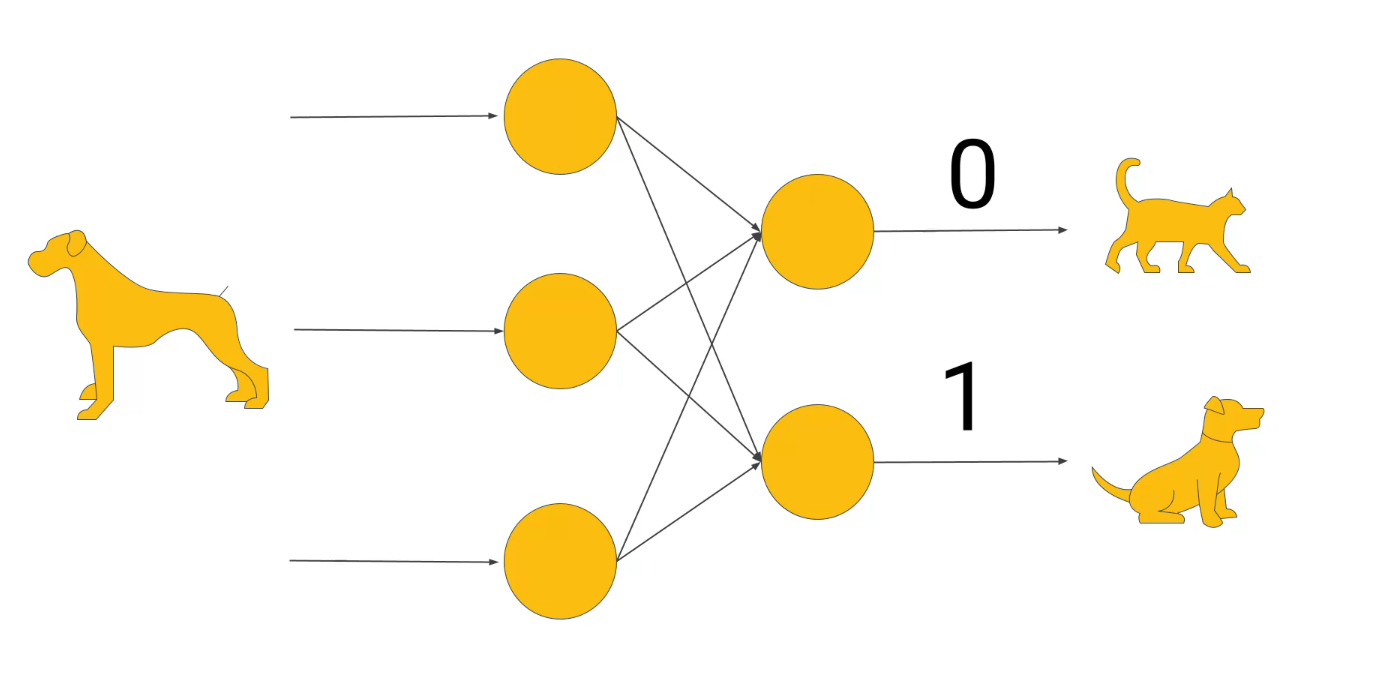

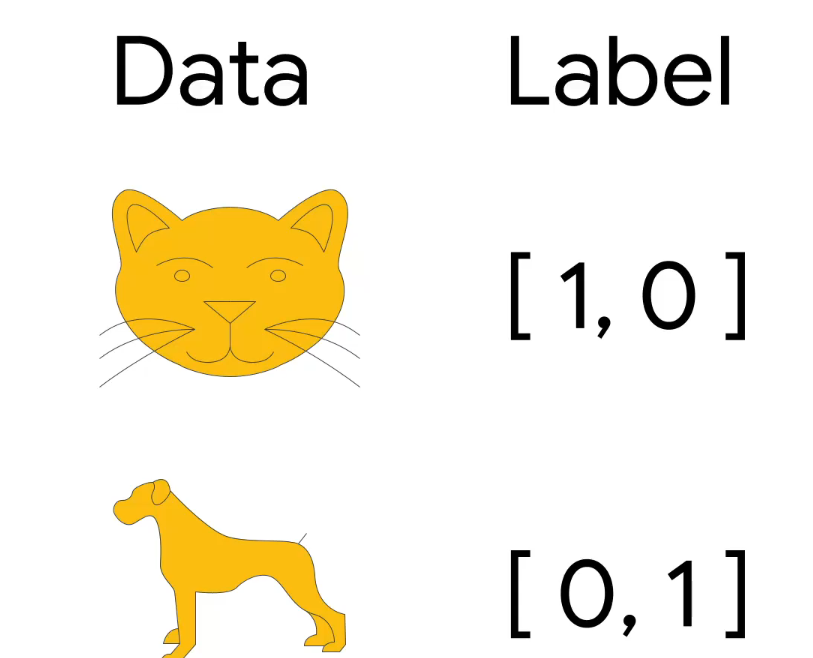

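An example is telling cats and dogs apart.

The input data is a picture of a dog, and the neurons work together to piece together information and patterns to decide whether the picture shows a dog or a cat. The answer is represented using one-hot encoding, where each class gets its own position and only the position for the correct class is set to 1. The result looks like this: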

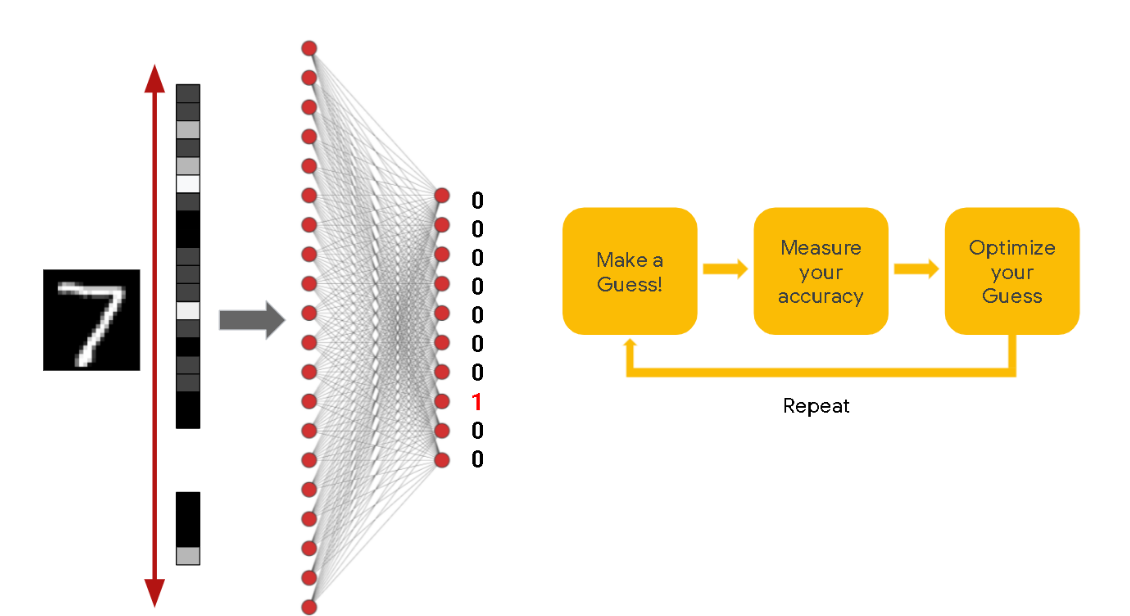
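One-hot encoding can be sketched in a few lines of Python. The class order (`cat` first, then `dog`) and the helper names `one_hot` and `decode` are assumptions for this illustration and may not match the order used in the picture.

```python
classes = ["cat", "dog"]  # assumed class order

def one_hot(label, classes):
    """Return a list with 1 at the label's position and 0 elsewhere."""
    return [1 if label == c else 0 for c in classes]

def decode(scores, classes):
    """Pick the class whose score is highest."""
    return classes[scores.index(max(scores))]

print(one_hot("dog", classes))      # [0, 1]
print(one_hot("cat", classes))      # [1, 0]
print(decode([0.1, 0.9], classes))  # dog
```

A trained network rarely outputs exact 0s and 1s, so `decode` simply picks the class with the highest score.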

A more complicated model: computer vision.

The dataset contains 70,000 example images, roughly 7,000 for each of the 10 digits from 0 to 9. Each 28x28 pixel image is flattened into a 784-value vector that can be fed into a neural network, with each pixel intensity corresponding to one neuron in the input layer. 60,000 of these image vectors make up the training set used to teach the network, while the remaining 10,000 are held out as a validation set for an unbiased evaluation of model performance.

During training, each neuron feeds its activation forward as input to the neurons in the next layer until the network produces an output. That output is compared to the known label, and backpropagation uses the resulting error on the training images to update the connection weights. The validation images are never used to update weights; they only measure how well the model performs on data it has not seen. By learning from 60,000 labeled examples that map 784 inputs to known digit classes, the network becomes a classifier that can predict the digit shown in a new image vector.
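The flattening and splitting steps can be sketched without loading any real data. The blank image and the index-based split below are stand-ins chosen for illustration; a real project would load the actual images and labels instead.

```python
def flatten(image):
    """Turn a grid of pixel rows into one long list of pixel values."""
    return [pixel for row in image for pixel in row]

# A stand-in 28x28 image where every pixel is 0 (assumed placeholder data).
blank_image = [[0] * 28 for _ in range(28)]
vector = flatten(blank_image)
print(len(vector))  # 784

# Split 70,000 example indices into a training set and a validation set.
indices = list(range(70_000))
train_indices = indices[:60_000]
validation_indices = indices[60_000:]
print(len(train_indices), len(validation_indices))  # 60000 10000
```

Because the two index lists do not overlap, no validation image is ever seen during training.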

Conclusion

Artificial intelligence systems leverage neural networks, computing architectures inspired by the brain, to iteratively learn from data without explicit programming. Through machine learning, models such as neural networks make predictions on a dataset, receive feedback on their errors, and use that feedback to make better guesses. This cycle of predicting, assessing, and refining allows AI to expand its capabilities by discovering patterns, even when a task requires subtlety beyond hardcoded rules.